- 25 Nov 2025

Digital discovery used to be linear.

A buyer searched a keyword, scanned a list of blue links, skimmed a few blogs, and filled out a form if they wanted more information. In B2B, this predictable flow shaped the entire playbook for content, SEO, and demand generation. But that world is fading.

Large language models (LLMs) are rewriting how buyers learn, evaluate, and choose solutions. Instead of browsing websites, buyers are asking AI for summaries, comparisons, and recommendations. Instead of reading whitepapers themselves, they get synthesized insights. Instead of clicking through content funnels, they get direct answers.

For B2B brands, that's the single biggest shift in digital discovery since the invention of Google — and it's already affecting visibility, buyer journeys, and how content performs.

Here's how LLMs are disrupting discovery, and what B2B marketers must do next.

1. Assistants Are Becoming the New Search Engines

When a buyer asks an LLM:

What are the best CRM tools for mid-market teams?

The model's answer becomes your new ranking system.

LLMs don't rank answers by backlinks or keyword matching the way a search engine does. They lean on:

- Semantic relevance

- Data quality

- Credibility signals

- Structured and consistent information

- Knowledge learned during training, plus real-time retrieval

If your brand appears in neither the model's training data nor its real-time retrieval results, you might not show up at all, even if you rank well on Google.

This creates a discovery gap: many B2B buyers are finding answers without ever landing on a website.

2. The Buying Journey is Collapsing Into a Single Conversation

Traditionally, a B2B buyer might:

- Google a keyword

- Read top-ranking blogs

- Visit vendors' websites

- Compare features manually

- Download resources

- Join sales calls

With LLMs, that becomes a single, multipurpose interaction:

"Explain which ABM platforms work best for SaaS companies.

Compare features.

Provide quotes.

Show alternatives.

Recommend a stack based on company size."

The assistant synthesizes everything instantly — meaning:

- Website content consumption decreases

- Middle-of-the-funnel content gets sidelined

- Comparison pages, listicles, and guides lose traffic

- Buyers reach sales teams later, with more pre-formed opinions

For B2B brands, this means your content must serve AI agents as much as it does humans.

3. Static Content Isn’t Enough — LLMs Prefer Structured, Chunked, Verified Data

LLMs are great at digesting:

- Cleanly structured FAQs

- JSON-LD schemas

- Product spec sheets

- Knowledge bases

- Clear definitions and "what/why/how" explanations

- Short, self-contained passages quotable independently of the rest

Long, unstructured blog posts are less likely to be used as evidence.

This means B2B brands should reorganize content for machine readability:

✔ Short answer boxes

✔ Clean headings

✔ Clear product data

✔ Well-labeled sections

✔ Consistent naming conventions

✔ High-quality citations

If LLMs can extract your content easily, they are more likely to surface it.
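As one way to make FAQ content machine-readable, the sketch below builds a schema.org `FAQPage` document in JSON-LD from plain question and answer pairs. The `FAQPage`, `Question`, and `Answer` types come from schema.org; the function name `build_faq_jsonld` and the ExampleCRM content are hypothetical and only there for illustration.

```python
import json


def build_faq_jsonld(faqs):
    """Return a schema.org FAQPage document as a JSON-LD string.

    `faqs` is a list of (question, answer) pairs.
    """
    doc = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in faqs
        ],
    }
    return json.dumps(doc, indent=2)


# Hypothetical FAQ content, for illustration only.
faqs = [
    ("Who is ExampleCRM built for?", "Mid-market sales teams of 20 to 200 people."),
    ("Does ExampleCRM publish pricing?", "Yes, all plans are listed on the pricing page."),
]

# Wrap the JSON-LD in the script tag that would be embedded in the page's HTML.
jsonld = build_faq_jsonld(faqs)
print(f'<script type="application/ld+json">\n{jsonld}\n</script>')
```

Generating the markup from the same source that renders the visible FAQ keeps the two in sync, which supports the "consistent naming" point above.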

4. Brand Authority Matters More Than Backlinks

LLMs rely on trust signals, which are not just hyperlinks. Authority in this environment is earned through:

- Repeated mentions of the brand online

- Consistent product messaging

- Verified authorship and expert credentials

- Clean metadata

- Positive sentiment across public sources

- Third-party citations

- Transparent documentation

This means B2B brands with stronger credibility footprints are more likely to be included in AI-generated recommendations.

Backlinks still matter, but brand validation matters more.

5. LLMs Will Change How Buyers Evaluate Vendors

Before LLMs, buyers compared vendors by reading lengthy pages or analyst reports.

Now evaluation looks like:

"Compare HubSpot to Zoho for a 50-person sales team.

Give me a pros and cons list for top SOC 2 compliance software.

Which lead generation tools will work best for B2B SaaS on a low budget?"

Models pull and merge content across the web. If your positioning isn't sharp and differentiated, then you'll be lost in the synthesis.

Brands have to:

- Explain category positioning

- Use consistent messaging across all touchpoints

- Define product features in plain, unambiguous terms that models can parse

- Publish comparison pages and competitor alternatives

- Feed models the right product language through structured documentation

Your differentiation now needs to be machine-readable, not just human-readable.
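Consistent messaging is easier to enforce when it is checked automatically. The sketch below scans page text for off-brand variants of product terms and reports which canonical name should replace them. The term list, page names, and the function `find_inconsistent_terms` are all hypothetical; a real audit would load them from your own style guide and content system.

```python
import re

# Hypothetical canonical terms mapped to variants that should be replaced.
CANONICAL_TERMS = {
    "Lead Scoring": ["lead-scoring engine", "LeadScore", "scoring module"],
    "Account Insights": ["account intelligence", "AcctInsights"],
}


def find_inconsistent_terms(pages, canonical_terms=CANONICAL_TERMS):
    """Return (page, variant, canonical) tuples for every off-brand variant found.

    `pages` maps a page name to its text. Matching is case-insensitive
    and limited to whole words.
    """
    findings = []
    for page, text in pages.items():
        for canonical, variants in canonical_terms.items():
            for variant in variants:
                pattern = r"\b" + re.escape(variant) + r"\b"
                if re.search(pattern, text, flags=re.IGNORECASE):
                    findings.append((page, variant, canonical))
    return findings


# Hypothetical page copy, for illustration only.
pages = {
    "pricing": "Lead Scoring is included in every plan.",
    "blog": "Our scoring module ranks accounts, and account intelligence adds context.",
}

for page, variant, canonical in find_inconsistent_terms(pages):
    print(f"{page}: replace '{variant}' with '{canonical}'")
```

A check like this could run whenever content is published, so that every touchpoint describes a feature with the same words.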

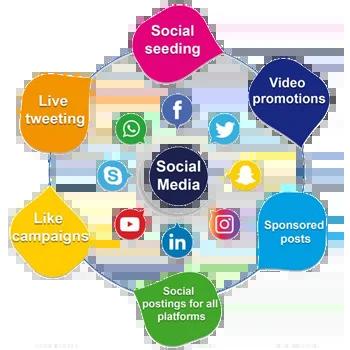

6. LLM Discovery is Shifting Power from Websites to Platforms

B2B buyers increasingly discover insights through:

- ChatGPT and custom GPTs

- Perplexity search results

- AI-powered browsers

- Vendor comparison bots

- LinkedIn AI summaries

- Enterprise RAG systems

- AI agents embedded in CRMs, ERPs, and project management tools

This means that discovery is moving away from:

❌ Web-first

❌ Funnel-first

❌ Keyword-first

Toward:

✔ AI-first

✔ Context-first

✔ Answer-first

If your brand is not feeding these AI ecosystems with clean, structured, authoritative data, then you are invisible where buyers are actually searching.

7. Content Moats Are Shrinking — Speed and Freshness Are Crucial

LLMs tend to favor recent sources, and B2B content goes stale quickly because:

- Competitors publish better structured pages

- Product updates shift positioning

- AI models prefer recent datasets

Fresh information tends to improve visibility in AI-assisted search.

To remain visible:

- Update product pages monthly

- Refresh features and pricing tables

- Publish brief, formatted knowledge updates

- Keep an authoritative changelog

- Keep FAQ and documentation libraries tidy

LLMs reward brands that stay current.
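A monthly refresh cadence is simple to monitor. The sketch below flags pages whose last update is older than a threshold, assuming you can export a last-updated date per page. The page paths, dates, and the function `find_stale_pages` are hypothetical, and the 30-day default is one reading of "monthly", not a rule.

```python
from datetime import date, timedelta


def find_stale_pages(last_updated, today, max_age_days=30):
    """Return page names not updated within `max_age_days`, oldest first."""
    cutoff = today - timedelta(days=max_age_days)
    stale = [(updated, page) for page, updated in last_updated.items() if updated < cutoff]
    return [page for updated, page in sorted(stale)]


# Hypothetical pages and dates, for illustration only.
last_updated = {
    "/pricing": date(2025, 11, 10),
    "/features": date(2025, 9, 2),
    "/changelog": date(2025, 11, 24),
    "/faq": date(2025, 6, 15),
}

print(find_stale_pages(last_updated, today=date(2025, 11, 25)))
# → ['/faq', '/features']
```

Passing `today` explicitly, rather than calling `date.today()` inside the function, keeps the check reproducible and easy to test.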

8. AI-Native Content Strategies Are Becoming a Competitive Advantage

Leading B2B brands are already redesigning their content architecture to support LLM discovery:

AI-Native Content Examples:

- Atomic content blocks designed for retrieval

- Modular product documentation

- Machine-friendly FAQs

- Schema-rich comparison tables

- Community Q&A with verified answers

- AI-ready customer stories with clear outcomes

- Structured datasets for models to ingest

This is a shift as important as the early move to mobile-first content a decade ago.
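To make "atomic content blocks designed for retrieval" concrete, here is a minimal sketch that splits a page into one self-contained chunk per heading. It assumes the source content is Markdown with `#` headings; the function `chunk_by_heading` and the sample page are hypothetical, and real retrieval pipelines usually add size limits and metadata on top of this.

```python
def chunk_by_heading(markdown_text):
    """Split Markdown into self-contained chunks, one per heading.

    Each chunk is a dict with the heading text and the body beneath it.
    Text before the first heading is stored under an empty heading.
    Note: any line starting with '#' counts as a heading, so '#' comment
    lines inside fenced code blocks would be split as well.
    """
    chunks = []
    heading, body = "", []
    for line in markdown_text.splitlines():
        if line.startswith("#"):
            # Close the previous chunk before starting a new one.
            if heading or any(part.strip() for part in body):
                chunks.append({"heading": heading, "text": "\n".join(body).strip()})
            heading, body = line.lstrip("#").strip(), []
        else:
            body.append(line)
    if heading or any(part.strip() for part in body):
        chunks.append({"heading": heading, "text": "\n".join(body).strip()})
    return chunks


# Hypothetical page content, for illustration only.
page = """# ExampleCRM pricing
Plans start with a free tier.

## Who is it for?
Mid-market sales teams.
"""

for chunk in chunk_by_heading(page):
    print(chunk)
```

Because each chunk carries its own heading, a passage can still be understood when it is quoted on its own, away from the rest of the page.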

9. The Next Frontier: AISO - AI Search Optimization

LLM-based search calls for a new discipline: AI Search Optimization, or AISO — the practice of optimizing content for LLM discovery, retrieval, and citation.

AISO is built on:

- Semantic clarity

- Trust signals

- Structured answers

- Clean metadata

- Consistent product terminology

- Multi-format content: text, transcripts, structured data

In the AI-driven discovery landscape, AISO becomes the new bedrock of visibility for B2B brands.

What B2B Brands Must Do Now

Here's the actionable playbook:

1. Re-architect content for LLM retrieval

Divide content into AI-friendly chunks, using clear headings and brief answers.

2. Bolster digital authority

Invest in thought leadership, citations, PR, and expert-driven content.

3. Create or enhance your knowledge base

Make it structured, scannable, and model-ready.

4. Be transparent

Publish pricing, features, FAQs and technical specs openly.

5. Own your positioning

LLMs amplify whichever message most clearly defines your place in the category relative to competitors, so make sure that message is yours.

6. Adopt AISO

Treat AI discoverability as a core growth channel.

Conclusion: B2B Discovery Has Changed — Forever

With LLMs changing the way buyers search, compare, and evaluate vendors, discovery no longer happens only on your website; more and more, it happens inside AI assistants that summarize, synthesize, and recommend in seconds.

B2B brands will have to transition from SEO-first to AI-first strategies where clarity, structure, authority, and freshness drive how often an LLM recommends your product.

The next era of digital discovery will be dominated by the brands that adapt early; the ones that wait risk disappearing from the conversation altogether.